环境配置

我习惯使用 Anaconda,这应该是配置开发环境最简单且最方便的方法之一了。环境可以在 Environments 里直接新建一个,接着安装相应的包,安装过程中,请留意选择 GPU 的版本,例如:

tensorflow-gpukeras-gpu

设备检测

安装完成后进入环境,尝试打印出设备看看,检查环境是否顺利配置:

1 | gpus = tf.config.experimental.list_physical_devices('GPU') |

如果顺利,应该会打印类似如下的信息:

[PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

可以看到,Tensorflow 已经能检测到 GPU 了,比如我就只有一块。

开启训练

我随便找了个 CNN 的网络,打算做个简单的测试。但在开启模型训练之后,程序就突然报错:

UnknownError: Failed to get convolution algorithm. This is probably because cuDNN failed to initialize, so try looking to see if a warning log message was printed above. [[node sequential/conv2d/Conv2D (defined at C:\path_of_envs\lib\site-packages\tensorflow_core\python\framework\ops.py:1751) ]] [Op:__inference_distributed_function_985]Function call stack:distributed_function

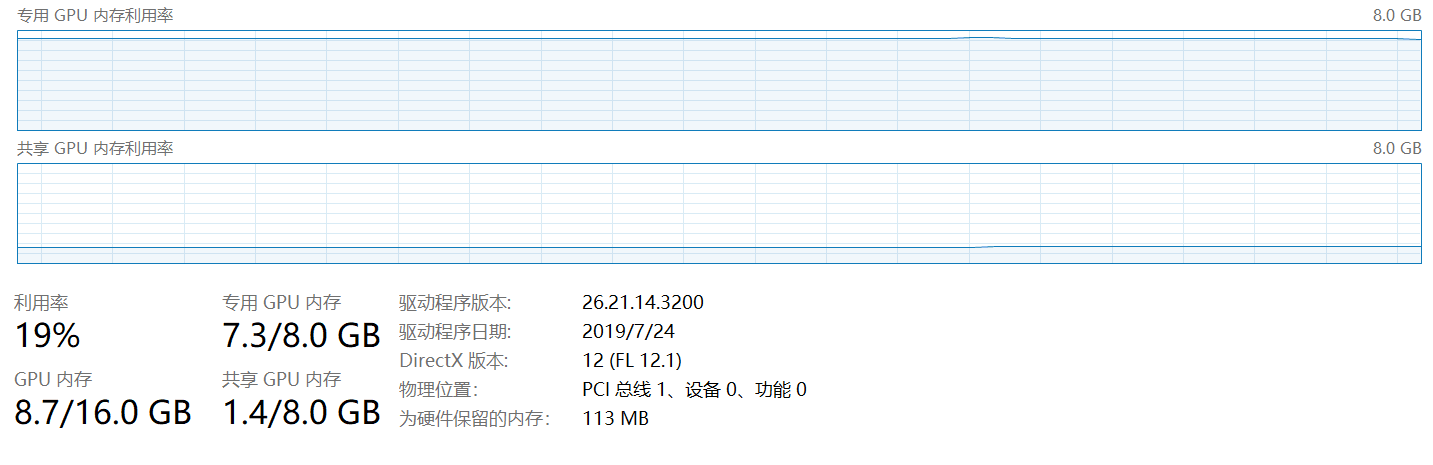

在这过程中,可以通过任务管理器留意到,当程序执行到定义网络结构的部分时,显存会突然开始飙升,直至几乎吃满。怀疑是不是显存炸了所以无法进行训练,毕竟已经有数据顺利跑了一部分了。

根据 Tensorflow 的官方文档1,它是这样描述的:

By default, TensorFlow maps nearly all of the GPU memory of all GPUs (subject to CUDA_VISIBLE_DEVICES) visible to the process. This is done to more efficiently use the relatively precious GPU memory resources on the devices by reducing memory fragmentation.

……

In some cases it is desirable for the process to only allocate a subset of the available memory, or to only grow the memory usage as is needed by the process. TensorFlow provides two methods to control this.

The first option is to turn on memory growth by calling tf.config.experimental.set_memory_growth, which attempts to allocate only as much GPU memory as needed for the runtime allocations

……

也就是说,默认情况下,Tensorflow 会把所有显卡的显存都吃掉,减少碎片从而提高效率。显然我的鸡踢叉 1080 根本经不起这样折腾,所以得靠 tf.config.experimental.set_memory_growth 进行按需分配:

1 | gpus = tf.config.experimental.list_physical_devices('GPU') |

可以把上面这段代码放在 import 部分的下面。如果你对显存大小的分配有更严格的要求,可以使用:

1 | tf.config.experimental.set_virtual_device_configuration( |

配置完成后,尝试运行~

[============================================================================================================>哇~~~~~~速度简直了!